Upgrade to the latest

google-cloud-bigquery and google-cloud-bigquery-storage

packages to download query results to a DataFrame 4.5 times faster

compared to the same method with version 1.16.0. If you aren't using the

BigQuery Storage API yet, use it to download your query results 15 times

faster compared to the BigQuery API. (31 times, if you don't mind using the

default Arrow to pandas conversion.)

This speed-up also works with the pandas-gbq library. Update,

the google-cloud-bigquery and

google-cloud-bigquery-storage packages and install

pyarrow, and set the use_bqstorage_api parameter to

True.

Code samples

To use the faster method to download large results, use the BigQuery Storage

API from your Python programs or notebooks.

Before you begin

- Enable the BigQuery Storage API: https://console.cloud.google.com/apis/library/bigquerystorage.googleapis.com

- Install the latest versions of the necessary Python packages (including

dependencies).

Steps for conda:

conda install --channel conda-forge 'google-cloud-bigquery>=1.17.0' \

'google-cloud-bigquery-storage>=0.7.0' \

'pandas-gbq>=0.10.0'

Steps for pip:

pip install --upgrade google-cloud-bigquery

pip install --upgrade google-cloud-bigquery-storage

pip install --upgrade pyarrow

pip install --upgrade google-cloud-core

pip install --upgrade google-api-core[grpcio]

From a notebook environment, the steps are the same, just prepend ! to call to the shell. For example:

!pip install --upgrade google-cloud-bigquery

Using pandas-gbq

import pandas_gbq

sql = "SELECT * FROM `bigquery-public-data.irs_990.irs_990_2012`"

# Use the BigQuery Storage API to download results more quickly.

df = pandas_gbq.read_gbq(sql, use_bqstorage_api=True)

Using the BigQuery client library

import google.auth

from google.cloud import bigquery

from google.cloud import bigquery_storage_v1beta1

# Create a BigQuery client and a BigQuery Storage API client with the same

# credentials to avoid authenticating twice.

credentials, project_id = google.auth.default(

scopes=["https://www.googleapis.com/auth/cloud-platform"]

)

client = bigquery.Client(credentials=credentials, project=project_id)

bqstorage_client = bigquery_storage_v1beta1.BigQueryStorageClient(

credentials=credentials

)

sql = "SELECT * FROM `bigquery-public-data.irs_990.irs_990_2012`"

# Use a BigQuery Storage API client to download results more quickly.

df = client.query(sql).to_dataframe(bqstorage_client=bqstorage_client)

What's next

New features

In addition to faster performance, google-cloud-bigquery

package version 1.17.0 adds a

RowIterator.to_arrow()

method to download a table or query results as a

pyarrow.Table

object.

Arrow provides a cross-language standard for in-memory, column-oriented data

with a rich set of data types. It is fast to create a pandas DataFrame from an

Arrow Table with the

to_pandas()

method. With the

fletcher library,

the Arrow Table can be used directly as the backing data structure of a

pandas extension array.

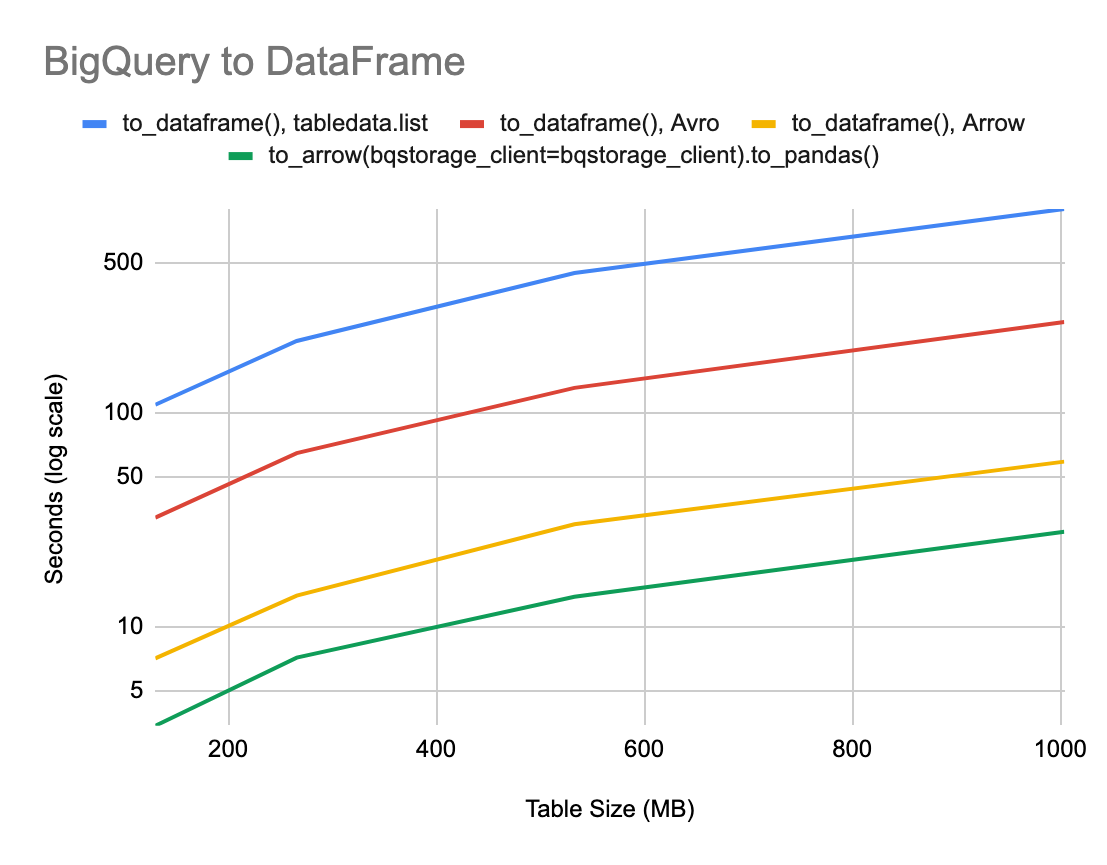

Better performance

We tested the performance of downloading BigQuery table data to pandas DataFrame

and Arrow Tables by sampling the

bigquery-public-data.new_york_taxi_trips.tlc_green_trips_*

tables.

|

Sample Rate

|

Table Size

|

Rows

|

|

6%

|

~130 MB

|

989,722

|

|

12.5%

|

~266 MB

|

1,980,102

|

|

25%

|

~533 MB

|

3,961,333

|

|

50%

|

~1 GB

|

7,916,643

|

We then timed how long it took to download these data to a pandas DataFrame from

a Google Compute Engine n1-standard-8 (8 vCPUs, 30 GB memory) machine. We used

the following methods:

-

A:

to_dataframe() - Uses BigQuery tabledata.list API.

-

B:

to_dataframe(bqstorage_client=bqstorage_client), package version 1.16.0 -

Uses BigQuery Storage API with Avro data format.

-

C:

to_dataframe(bqstorage_client=bqstorage_client), package version 1.17.0 -

Uses BigQuery Storage API with Arrow data format.

-

D:

to_arrow(bqstorage_client=bqstorage_client).to_pandas(), package version

1.17.0 - Uses BigQuery Storage API with Arrow data format.

|

|

A

|

B

|

C

|

D

|

|

~130 MB

|

108.5 seconds

|

32.3 seconds

|

7.1 seconds

|

3.4 seconds

|

|

~266 MB

|

215.3

|

64.5

|

13.9

|

7.2

|

|

~533 MB

|

447.0

|

130.1

|

30.0

|

13.8

|

|

~1 GB

|

890.7

|

264.1

|

58.9

|

27.7

|

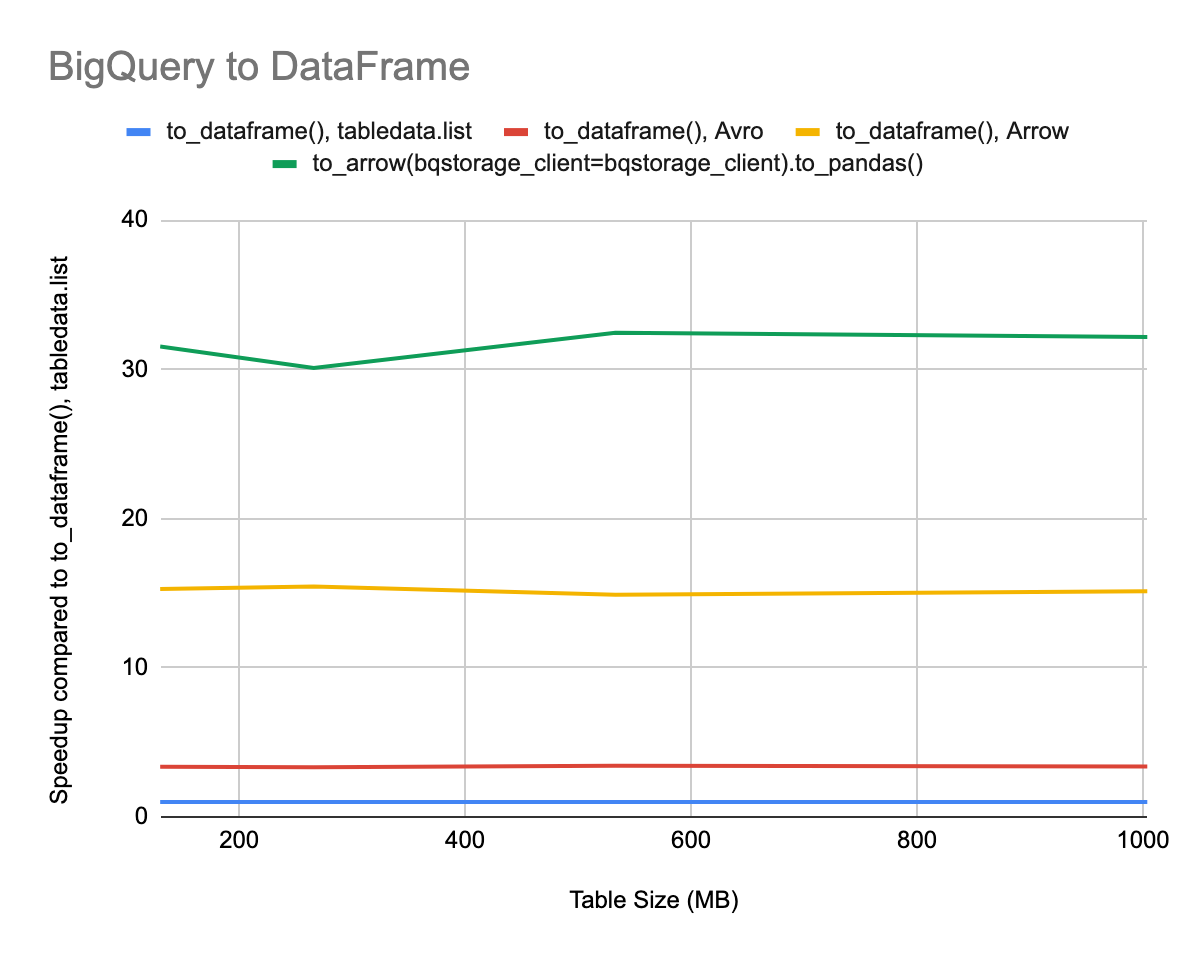

The speedup is quite stable across data sizes. Using the BigQuery Storage

API with the Avro data format is about a 3.5x speedup over the BigQuery

tabledata.list API. It's about a 15x speedup to use the

to_dataframe() method with the Arrow data format, and a 31x

speedup to use the to_arrow() method, followed by

to_pandas() with the BigQuery Storage API.

|

|

A

|

B

|

C

|

D

|

|

~130 MB

|

1x speed-up

|

3.4x speed-up

|

15.3x speed-up

|

31.5x speed-up

|

|

~266 MB

|

1x

|

3.3x

|

15.4x

|

30.1x

|

|

~533 MB

|

1x

|

3.4x

|

14.9x

|

32.4x

|

|

~1 GB

|

1x

|

3.4x

|

15.1x

|

32.2x

|

In conclusion, the fastest way to get a pandas DataFrame from BigQuery is

to call

RowIterator.to_arrow(bqstorage_client=bqstorage_client).to_pandas().

The reason for this difference is that to_dataframe() converts

each message into a DataFrame and then concatenates them into a single

DataFrame at the end. Whereas to_arrow() converts each message

to a RecordBatch and creates a Table at the end. The difference in time is

likely because pandas.concat(dfs) can make copies, where as

pyarrow.Table.from_batches() doesn't make any copies. Also,

pyarrow.Table.to_pandas() is often zero-copy.

Limitations

This BigQuery Storage API does not have a free tier, and is not included

in the BigQuery Sandbox. Because of these limitations, you are required to

have a billing account to use this API. See the BigQuery Storage

API pricing page for details.

The BigQuery Storage API does not yet read from small anonymous query

results tables.

There are restrictions on the ability to reorder projected columns and

the complexity of row filter predicates. Currently, filtering support when

serializing data using Apache Avro is more mature than when using Apache

Arrow.

Changelog

- July 29, 2019: Initial post

- September 25, 2019: Add code samples